It’s not enough that your code is readable: it must perform well too.

In this post, I will show a list of common antipatterns in TSQL coding that make code perform badly.

They are cumulative. Just don’t make these mistakes, and you’ll be much better off.

1- Not making predicates SARGable

What is SARGable?

Sargable is a word that links together the terms: search, argument, and able.

A SARGable predicate means that SQL Server can perform an index seek using your search conditions (if an index exists).

When the query uses indexes more effectively, the result is less consumption of system resources and more performance.

Sargable and NON-SARgable Operators

SARGable predicate includes the operators:

=, >, >=, <, <=, IN, BETWEEN, and LIKE /*(in the case of prefix matching)*/

A non-sargable predicate requires a higher level of the SQL server to take action.

Typically, in these cases, the SQL server can not isolate the single value or range of index key values to process.

Performance differences

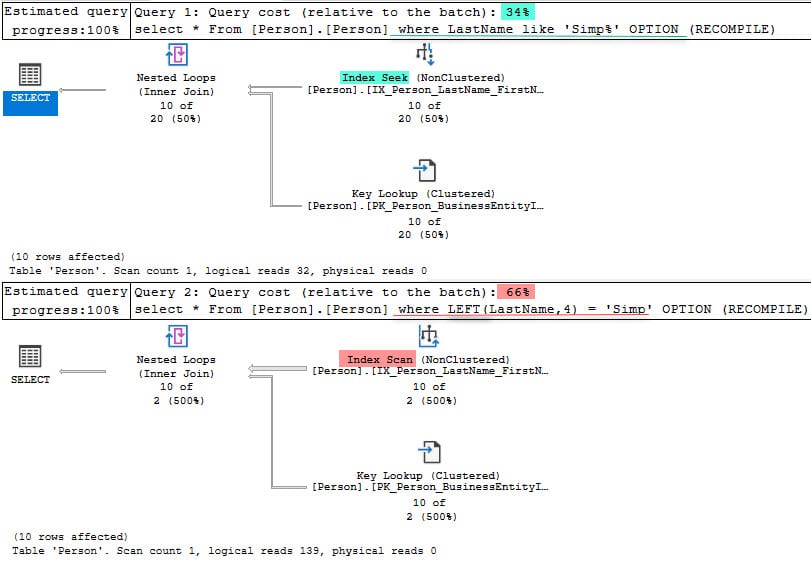

As shown in the example below, both queries are equivalents.

Figure 1 – SARGable predicates sample.The first query is using an INDEX seek (SARGable) and reads 32 pages.

The non-sargable is using an Index scan and reads 139 pages – 4x more reads.

Note: Even though both queries are utilizing the index, there is a higher cost in the INDEX SCAN operation.

2- Using user-defined scalar functions in predicates

it’s not very hard to have problems with user-defined functions.

Prevent the proper use of indexes

Most of the functions that you run against your columns in WHERE and ON clauses will prevent the proper use of indexes.

Inaccurately execution plan costs

SQL Server evaluates your query to determine the best and most efficient execution plan based on data estimation.

Data estimation depends on statistics created on the tables involved in the query.

SQL Server’s Optimizer is cost-based, and these estimations are essential to determine the “plan cost.”

The optimizer cannot accurately estimate the cost of the scalar UDF when you use a UDF in the predicate.

Scalar functions are not set based

User-defined scalar functions perform much like a cursor, causing row-by-row processing. So, in most cases, it will result in a lousy performance.

Try converting it to a table-valued function

Often you can convert them to an inline table-valued function (TVF) using a CROSS APPLY operator.

Not every conversion is going to increase performance. In any situation, proper testing and understanding of the features are essential for a better solution.

In this article, Simon Liew gives more details on how to refactor SQL Server scalar UDF to inline TVF.

3 – Ignoring data types

It is simple in concept but seems to be surprisingly difficult in practice.

Choosing the right data types for SQL Server objects not only improves performance by ensuring a correct execution plan, but it also increases data integrity.

Data types matter

Do not believe me? Check this post: Why data type choices matter?

Implicit conversions

When you mismatch data types in a WHERE clause (or JOIN condition), SQL Server needs to convert one, automatically, based on its Data Type Precedence rules.

The result is that indexes will not be used efficiently, and you’ll burn CPU in the conversion process.

In short, not all implicit conversions are a problem.

However, it’s still a best practice to use query parameters or variable datatypes to make them match the table.

4 – Not using OPTION (RECOMPILE)

As you may have heard before, you should not merely include WITH RECOMPILE in every stored proc as a matter of habit.

By doing that, you will eliminate one of the primary benefits of stored procedures, the fact that the query plan will be cached and re-used.

Parameter sniffing

A good reason to force a procedure to recompile is to counteract the parameter sniffing behavior of procedure compilation.

Dynamic SQL

Usually, statistics are automatically updated when the rows that you are querying changes drastically from call to call.

However, if you are building dynamic SQL or calling SQL within a tight loop, there is a possibility you are using an outdated Query Plan based on the wrong drastic number of rows or statistics.

Again, in this case, the OPTION (RECOMPILE) can be useful.

Temporary table populated within the store procedure

Try to use RECOMPILE hints on individual statements when you have a query that references a temp table that is filled within the store procedure.

It will ensure that when your execution plan is created, it will be using the latest information. You might suddenly get some significant improvement.

Note: Be careful in OLTP environments, avoid use recompile options in the stored procedure that are executed very often.

5 – Not JOINing on keys

Almost always, when you ever join multiple tables, you want to join them on the keys, whether it’s a primary key or an alternate key or a unique index.

If you don’t, you have the risk of retuning multiple rows (duplicated rows).

Unnecessary DISTINCT

What people usually do when having multiple rows is to use DISTINCT or GROUP by to remove the duplicate rows.

The final result is more cost for your query.

6- Using unessentially Order by

It is always bad news if your query is using the SORT operator. It is worse if you get a warning that the sort operations are spilling into TempDB.

As we can see below, the sort operation can easily represent 93% of the entire cost of the query.

If you are getting SORT warnings, this is a call for action, because on a hard-working server this will lead to slow-running queries that will lead to even more demands on TempDB.

Sort in the presentation layers

Sorting in the database can be pretty bad; avoid where possible.

If you can offload it to the app or presentation layer, that’s usually the best choice.

NOTE: Keep in mind that SQL may inject Sorts into an execution plan even if you don’t call ORDER BY explicitly, to assist with aggregations, windowing functions, etc.

7 – Having too many JOINS

When you join two tables together, the optimizer looks at the table’s statistics to determine cardinally estimates coming out of that join.

When you have five tables, for example, SQL does not do a single of all five, it joins two, and then joins the third one, and so on.

So, each one of these joins adds more potential discrepancy or error in the Cardinality.

Reduce optimizer potential to make bad choices

When optimizer gets off the Cardinality, it increases the potential to make bad choices. It can use a nested loop instead of a hash or something, and your performance goes down.

There is not a magic number for joins. Usually, when a query has a performance problem and has five or more joints, it is a good idea to look if many joins are not one of the causes of poor performance.

Limit the error introduced

One solution is to use a temp table to split the query.

Reducing the path, you are limiting the error that SQL optimizer can introduce.

8 – Using Union instead of Union all

In MS SQL Server, a UNION statement is a UNION that eliminates duplicates.

To do that, as shown above, it has to perform a sort operation across the entire returned rowset, sorting by every column, which can be very expensive.

The alternative is a UNION ALL. Often a UNION ALL is what’s needed, and avoiding performance problems.

9 – Over-using views

Views are great!

The big problem with the views is that someone can put lousy code into a view, and therefore made it reusable.

Unnecessary Information

Sometimes, people use a view to returning data that can be grabbed directly from a table.

As you can see below, both queries are very similar (same result set).

SELECTve.[FirstName],

ve.[LastName],

vs.[SalesYTD]

From [Sales].[vSalesPerson] vs

INNER JOIN [HumanResources].[vEmployee] ve

ON vs.BusinessEntityID = ve.BusinessEntityID

SELECTp.[FirstName]

,p.[LastName]

,s.[SalesYTD]

FROM [Sales].[SalesPerson] s

INNER JOIN [HumanResources].[Employee] e

ON e.[BusinessEntityID] = s.[BusinessEntityID]

INNER JOIN [Person].[Person] p

ON p.[BusinessEntityID] = s.[BusinessEntityID] /

But, when we see the queries plan, we found a huge difference.

In this case, the first query using views have many joins undercover, causing BAD performance.

Nesting views

There are cases where views use other views inside them, in a cascade.

The more involved they get, the more problematic and inconsistent is the result.

In addition to the performance reasons, there are other reasons not to use nested views.

Supporting and maintaining nested views can be a nightmare.

10 – Allowing “row by row” processing

Last but not least, this issue comes from using cursors or WHILE loops instead of set-based operations, resulting in extremely slow performance.

Quick performance death

What is a cursor in SQL Server and why do you need it?

Cursors are used frequently as the wrong tool for the wrong task, especially when operating on a large set of data.

Usually, a developer uses them for quick programming when he does not have a full understanding of set operations.

Avoid the use of cursors

Cursors are just something to be avoided outside of areas where you have no choice, like maintenance routines that have walk tables or databases.